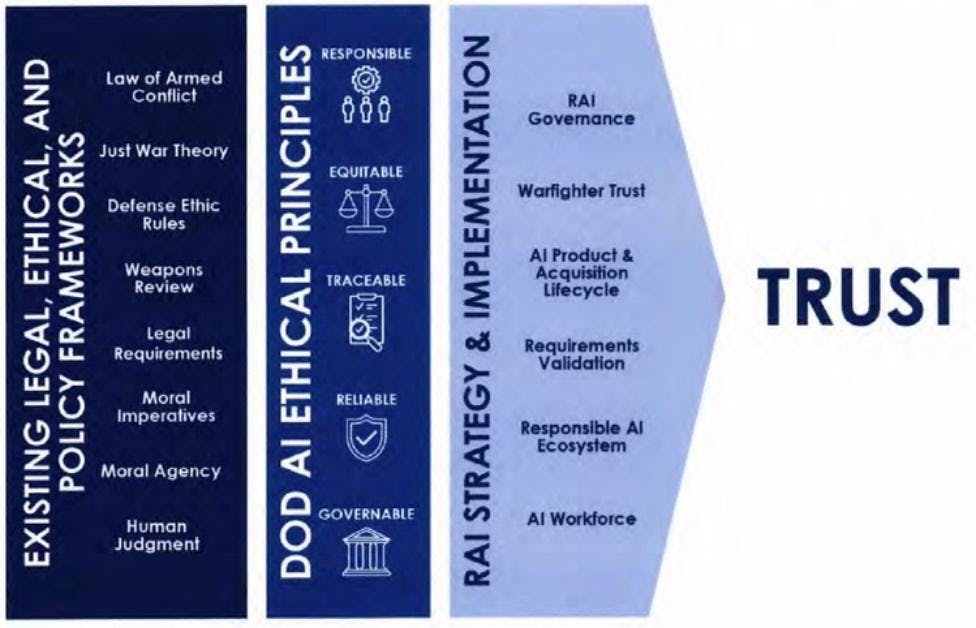

Appendix 3. Laws, Ethical Frameworks, and Policies

One list of relevant frameworks is provided by the RAI Strategy & Implementation Pathway (fig. 2, p. 7):

- The Law of Armed Conflict: For more information, see the DoD Law of War Manual.

- Just War Theory: A framework for assessing the moral justifications of, and limitations to, war.

  - “[T]he just war criteria provide objective measures from which to judge our motives. The effective strategist must be prepared to demonstrate to all sides why the defended cause meets the criteria of just war theory and why the enemy’s cause does not. If a legitimate and effective argument on this basis cannot be assembled, then it is likely that both the cause and the strategy are fatally flawed.” [MARINE CORPS DOCTRINAL PUBLICATION 1-1, Strategy, 93, 95 (1997)]

- Defense Ethics Rules: DoD-wide ethics policies and regulations. For more information, refer to the DoD Standards of Conduct Office (SOCO).

- Legal Requirements: US domestic law, applicable international law and treaties, and similar legal obligations.

- Privacy and Security Requirements: US privacy and security requirements mandated by statute, regulation, standard, or policy.

- Moral Agency: Systems should be designed to preserve human agency where appropriate, and accountability flows should be established such that humans remain responsible for the system.

  - Cf. “Humans are the subjects of legal rights and obligations, and as such, they are the entities that are responsible under the law. AI systems are tools, and they have no legal or moral agency that accords them rights, duties, privileges, claims, powers, immunities, or status. However, the use of AI systems to perform various tasks means that the lines of accountability for the decision to design, develop, and deploy such systems need to be clear to maintain human responsibility. With increasing reliance on AI systems, where system components may be machine learned, it may be increasingly difficult to estimate when a system is acting outside of its domain of use or is failing. In these instances, responsibility mechanisms will become increasingly important.” [see p. 27 of the Supplement to the DIB AI Ethics Report].

- Human Judgment: Systems should be designed such that human decision makers exercise appropriate levels of judgment over system outputs. What constitutes “appropriate levels” will depend on the context.

For additional guidance, see the NIST AI Risk Management Framework (AI RMF) Playbook, GOVERN 1.1.