RAI Toolkit

The Reliable Artificial Intelligence (RAI) Toolkit provides a centralized process for identifying, tracking, and improving the alignment of AI projects with RAI best practices and the DoD AI Ethical Principles, while capitalizing on opportunities for innovation. The RAI Toolkit guides users through an intuitive flow of tailorable, modular assessments, tools, and artifacts across the AI product lifecycle. The process enables traceability and assurance of reliable AI practice, development, and use.

Getting Started

The RAI Toolkit is a self-assessment tool designed to help teams evaluate AI projects throughout the development lifecycle. We recommend creating an account to save projects, collaborate with others, and track progress over time.

Once you are logged in, use the workspace dropdown (top right) to join or create an organization workspace for team collaboration.

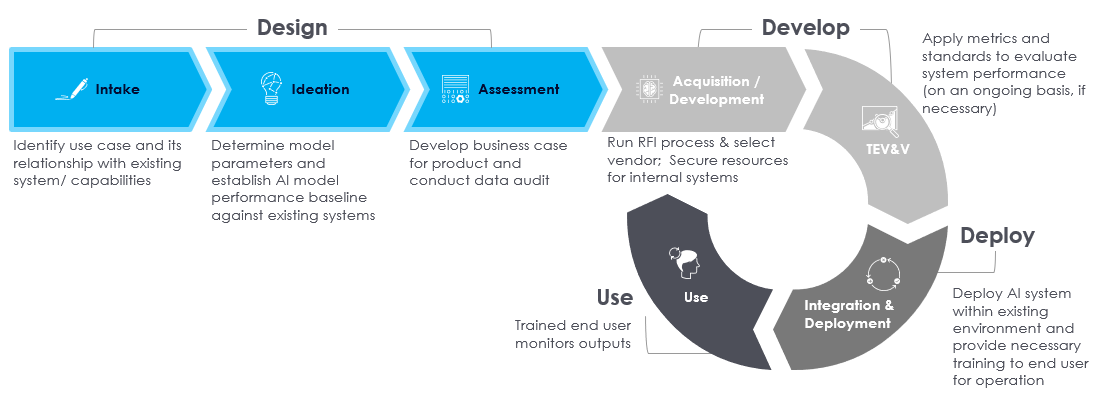

AI Product Life Cycle

Overview of RAI Activities throughout the Product Life Cycle

Additional Parts of the Toolkit

RAI Toolkit Help

Get guidance on creating accounts, saving your work, managing projects, and using key features like filters and the responsibility matrix. Learn how to export PDFs and collaborate effectively with your team.

RAI Tools List

A collection of tools and resources to help you design, develop, and deploy reliable AI applications.