Appendix 1. DAGR

Defense AI Guide on Risk (DAGR)

The Responsible Artificial Intelligence (RAI) Defense AI Guide on Risk (DAGR) is intended to provide DoD AI stakeholders with a voluntary guide that promotes holistic risk capture and improved trustworthiness, effectiveness, responsibility, risk mitigation, and operations.

The DAGR’s primary purpose is to highlight applicable RAI concepts, holistically guide risk evaluation, provide abstracted risk models to manage risk, and offer an approach to quantify the holistic risk of AI capabilities while promoting responsibility, trust, and the United States National Security Strategy. Further, DAGR aligns to the DoD AI Ethical Principles, NIST AI Risk Management Framework (AI RMF), best practices, other governing Federal and DoD guidance (including other DoD risk management guidance), and the considerations from allies and partners of the United States.

There are seven guiding dimensions of risk within the NIST AI RMF. According to the AI RMF, units should strive to ensure AI platforms and applications are (1) valid and reliable, (2) safe, (3) secure and resilient, (4) explainable and interpretable, (5) privacy-enhanced, (6) fair – with harmful bias managed, and (7) accountable and transparent. Further expansion of the seven guiding dimensions of AI risk can be found in the latest NIST AI RMF document. It is important to note that the series of questions provided by DAGR is not intended to serve as go/no-go criteria or as an authority-to-operate (ATO); rather, it serves as a guide to promote risk thinking and assessment for AI capabilities.

Several approaches to examine risk and impacts are used within private and public domains, such as the Strengths, Weaknesses, Opportunities, and Threats (SWOT) analysis or Political, Economic, Social, and Technological (PEST) analysis. In support of additional societal concerns, and federal regulations and guidance (such as Presidential Executive Orders and The Department of Defense elevating climate change as a national security priority), DAGR expands upon the PEST model to provide a novel approach, referred to as a STOPES analysis.

A STOPES analysis examines the Social, Technological, Operational, Political, Economic, and Sustainability (STOPES) factors.

Stopes refers to a mining concept of excavating a series of steps or layers, and the STOPES acronym is fitting to the concept of risk management within AI due to the significant implications that may arise from an AI capability and the requirement to analyze and explore layers of impacts and risk across multiple disciplines. As AI stakeholders refer to the guidance provided here, it is important to incorporate a STOPES analysis to fully realize the risks of AI capabilities and mitigate accordingly.

Risk is a dynamic concept that is realized in context. Therefore, it is prudent to evaluate risk not only throughout the operational window of an AI capability, but across the entire lifecycle. In addition to being dynamic, risk may be realized through an AI capability's relationships with other AI capabilities or with the environment it operates within. It is the responsibility of the decision maker, commander, and warfighter to appropriately assess risk throughout the lifecycle.

The DAGR deliberately utilizes the mnemonic to symbolize how – contrary to a common objection – RAI is not an impediment to warfighter effectiveness, but instead promotes increased capability to the warfighter and support for commander intent, all within acceptable risk parameters. Additionally, when performed appropriately, RAI earns the confidence of the American public, industry, academia, allies and partners, and the broader AI community to sustain our technological edge and capability from the boardroom to the battlefield.

The content of this document reflects recommended practices. This document is not intended to serve as or supersede existing regulations, laws, or other mandatory guidance.

1. Profiles

According to the NIST AI RMF, use-case profiles are implementations of the AI RMF functions, categories, and subcategories for a specific setting or application based on requirements, risk tolerance, and resources of the framework user. For example, an AI RMF operational commander/decision maker profile or AI RMF cybersecurity profile may have different risks to highlight and address throughout the AI capability lifecycle.

The DAGR’s primary purpose is to highlight applicable RAI concepts, holistically guide risk evaluation, and provide an abstracted risk model to mitigate risk of AI capabilities while promoting responsibility and trust.

Future expansions of the DAGR may include risk highlights, questions, and mitigations for appropriate profiles. For example, an AI RMF operational commander/decision maker profile guide may be produced that delineates appropriate risk factors that must be evaluated at this level of responsibility, while also abstracting more technical and detailed factors that will be addressed by other profiles but must still be considered by the operational commander/decision maker. Another example may be an AI RMF data science profile that would conduct detailed risk evaluations with emphasis on data, such as computational biases, but the operational commander/decision maker profile must ensure it is addressed and mitigated appropriately without necessarily focusing on the technical details.

2. Introduction and Key Concepts

The DoD has made significant progress in establishing policy and strategic guidance for the adoption of AI technology since the 2018 National Defense Strategy (NDS) that highlighted the Secretary of Defense’s recognition of the importance of new and emerging technologies, to include AI. Since then, the DoD has released the “DoD AI Ethical Principles”, which highlights that the following principles must apply to all DoD AI capabilities, encompassing both combat and non-combat applications:

- Responsible: DoD personnel will exercise appropriate levels of judgement and care, while remaining responsible for the development, deployment, and use of AI capabilities.

- Equitable: Deliberate steps must be taken to minimize unintended bias in AI capabilities.

- Traceable: DoD AI capabilities will be developed and deployed such that relevant personnel possess an appropriate understanding of the technology, development process, and operational methods applicable to AI capabilities, including with transparent and auditable methodologies, data sources, and design procedure and documentation.

- Reliable: DoD AI capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness of such capabilities will be subject to testing and assurance within those defined uses across their entire lifecycle.

- Governable: AI capabilities will be designed and engineered to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences, and the ability to disengage/deactivate deployed systems that demonstrate unintended behavior.

In addition to the established DoD AI Ethical Principles, the National Institute of Standards and Technology (NIST) released the AI RMF in early 2023. Within this framework, stakeholders should ensure that AI platforms and applications mitigate risk to an acceptable level across the seven guidelines. For the remainder of this document, AI systems, models, platforms, and applications will be referred to as AI capabilities. The seven guidelines are:

- Valid and Reliable: Factors to confirm, through objective evidence, that the requirements for a specific intended use or application have been fulfilled. Also included are factors to evaluate the ability of an AI capability to perform as required, without failure, for a given time interval, under given conditions.

- Safe: Factors that ensure that under defined conditions, AI capabilities should not lead to a state in which human life, health, property, or the environment are endangered.

- Secure and Resilient: Factors to evaluate AI capabilities and an ecosystem’s ability to withstand unexpected adverse events or unexpected changes in their environment or use. This includes factors related to robustness, maintainability and recoverability.

- Accountable and Transparent: Factors related to the extent to which information about an AI capability and its outputs are available to stakeholders interacting with an AI capability.

- Explainable and Interpretable: Factors related to the extent to which the operational mechanisms of an AI capability can be explained, and the meaning of an AI capability’s output is as designed for operational purposes (interpretability).

- Privacy-Enhanced: Factors related to the norms and practices that contribute to the safeguarding of human autonomy, identity, and dignity.

- Fair — with Harmful Bias Managed: Factors related to the concerns for equality and equity due to harmful bias and discrimination. Computational factors are generally focused on, but emphasis must be placed on human and systemic biases. Computational bias may occur when a sample is not accurate or representative of the population/subject in question. Human bias may occur due to systemic errors in human thought and perception. Systemic bias may occur from beliefs, processes, procedures, and practices that may result in advantages and disadvantages to various social groups.

It is vital to the concept of RAI that both the DoD AI Ethical Principles and AI RMF be considered together to capture a greater risk picture.

3. AI Risk Relationship Dynamics

When exploring and assessing AI risk, there are several risk relationship dynamics to consider prior to the deployment of an AI capability.

AI risk has a shifting dynamic, meaning that throughout the lifecycle of an AI capability, overall risk may shift and become more or less impactful.

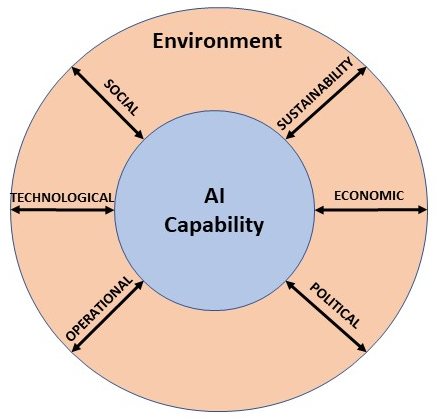

AI risk may have a bidirectional dynamic with the environment. This implies that an AI capability may influence the environment it operates within, and the environment may influence the AI capability. Figure 1 represents this potential bidirectional dynamic between the AI capability and the environment.

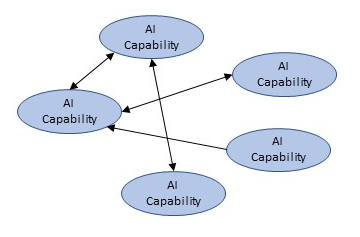

Risks between AI capabilities may be interconnected when one capability depends on another. A dependency exists when one capability requires another capability to perform its designated function. This implies that the residual risk of one AI capability may affect the risk of another AI capability, but only if a dependency exists between them. The dependency, and therefore the risk dynamic, does not have to be bidirectional.

4. Risk Factors, Risk Assessment and a Hierarchy of AI Risk

During the lifecycle of an AI capability, it is critical for AI stakeholders to evaluate the shifting, interconnected, and bidirectional dynamics of risk across the social, technological, operational, political, economic, and sustainability (STOPES) factors. The descriptions of the STOPES factors below may not address every component or concern related to a factor. Therefore, it is the responsibility of the decision maker to ensure all appropriate risk factors are considered. Within this section, the concepts of STOPES and high-level risk perspectives will be presented to guide further in-depth risk discussion and assessment.

Important note: Before reviewing the STOPES factors, it is necessary to highlight that supply chain risks may be evaluated across several of the STOPES factors. Common supply chain risks include poor contractor and/or supplier performance, labor shortages, funding, geopolitical, cyber, environmental and reputational risk, to highlight a few.

Reviewing the above list for supply chain risks as an example, it can be surmised that cyber supply chain risks related to software and hardware would reside within technological factors, but poor contractor and/or supplier performance, labor shortages, and funding would be categorized within economic factors of the STOPES analysis.

Social Factors: Factors related to community, social support, income, education, race and ethnicity, employment, and social perceptions.

Technological Factors: Factors related to the organizational impacts of a technological capability being inoperable, compromised or operating incorrectly, and appropriate supply chain risks related to technology and security.

Operational Factors: Factors that may result in adverse change in resources resulting from operational events such as military (combat and non-combat) operations, inoperability or incorrectness of internal processes, systems, or controls, external events, and appropriate supply chain risks related to operations. Operational factors also include reputation, legal, ethical, and human-machine interaction and the corresponding feedback loop of this interaction.

Political Factors: Factors related to government policy, changes in legislation, political climate, and international relations.

Economic Factors: Economic factors that may influence the organization, such as access to funding, acquisition processes and vehicles, labor costs and workforce skill, market conditions, and appropriate supply chain risks related to economics.

Sustainability Factors: Factors related to human-, environment-, social-, and economic-sustainability. The intersection and balance of environment, economy, and social equity support sustainability initiatives. It is important to note that the social sustainability and economic sustainability factors are specialized topics within the aforementioned economic and social categories and are recommended to be highlighted here. Factors include concepts related to climate change, the environment, energy usage, social responsibility, human security, and appropriate supply chain risks related to sustainability.

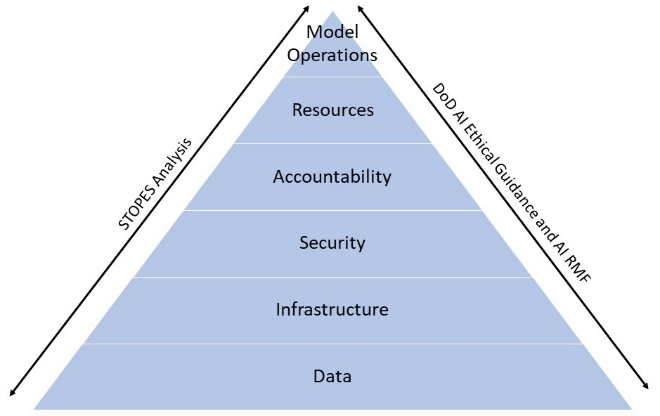

When evaluating risk across the STOPES factors, we recommend utilizing the DISARM hierarchy of AI risk. DISARM is an acronym for data, infrastructure, security, accountability, resources, and model operation. The DISARM hierarchy is intended to serve as an abstracted model to prioritize the evaluation and mitigation of risk of an AI capability. It is important to note that addressing the risk at a lower level of the hierarchy does not mean that a stakeholder can move up the hierarchy and ignore risk factors of lower levels at later intervals of the AI capability's lifecycle.

Within the DISARM hierarchy of AI risk, AI stakeholders should strive to mitigate risks associated with data first, since the collection, cleaning, and labeling of data are critical in reducing bias, in promoting the validity, reliability, and effectiveness of AI capabilities, and in mitigating risk at the higher levels of the hierarchy.

Continuing along the hierarchy, the AI stakeholder will continue evaluating risk and factors associated with infrastructure, security, accountability, resources, and model operations, in order. Without adequately mitigating risk at the lower levels, AI stakeholders cannot expect risk to be appropriately mitigated at the higher levels, or the AI capability to operate as intended. Figure 3 depicts the DISARM hierarchy of AI risk.

Below is a description of each layer of the DISARM hierarchy of AI risk, but this is not intended to be an all-inclusive list.

- Data: The foundation of the DISARM hierarchy is the evaluation of factors associated with data, which include ensuring that data is collected appropriately (manual and/or automated collection), data is accurate, integrity is maintained, and biases are mitigated.

- Infrastructure: Factors related to the infrastructure that data and capabilities traverse or interact with, to include any sensors. Infrastructure factors also include elements that may impact the availability of data to support AI capabilities.

- Security: Factors related to the security, resiliency, and privacy of data, technology, and people. Security factors include elements that may impact the confidentiality, integrity, availability, authentication, and non-repudiation of AI capabilities. Several cybersecurity risk management frameworks are in existence and selection is dependent on applicable authorities. For example, utilizing the DoDI 8510.01 Risk Management Framework for DoD Systems or the NIST Cybersecurity Risk Management Framework may be appropriate. It is the responsibility of the AI stakeholders to select and use the appropriate (and mandated) cybersecurity guidance.

- Accountability: Accountability is a prerequisite to transparency and includes accountability factors to AI capabilities, society, DoD units/forces, and partners and allies. Accountability also involves evaluating factors related to an AI capability being valid, reliable, explainable, and interpretable. By addressing these risk factors, AI stakeholders are able to promote an acceptable level of trust and confidence in operations using an AI capability.

- Resources: Factors related to people, equipment, or ideas available to respond to a threat or hazard to an AI capability.

- Model Operations: Factors related to the correct operation of a model or AI capability during the operational window.
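The ordering of the hierarchy can be sketched in code. This is a minimal illustration, not part of the DAGR: the `DisarmLayer` enum, the `review_order` helper, and the example risks are all hypothetical names and values chosen here, and the only thing taken from the text is the order of the six layers.

```python
from enum import IntEnum


class DisarmLayer(IntEnum):
    """DISARM layers, numbered from the foundation (1) upward."""
    DATA = 1
    INFRASTRUCTURE = 2
    SECURITY = 3
    ACCOUNTABILITY = 4
    RESOURCES = 5
    MODEL_OPERATIONS = 6


def review_order(risks):
    """Sort (layer, description) pairs so lower layers are reviewed first.

    sorted() is stable, so risks in the same layer keep their input order.
    """
    return sorted(risks, key=lambda item: item[0])


# Hypothetical risks, for illustration only.
example_risks = [
    (DisarmLayer.MODEL_OPERATIONS, "model drift during the operational window"),
    (DisarmLayer.DATA, "training sample not representative of the population"),
    (DisarmLayer.SECURITY, "unauthenticated access to a model endpoint"),
]

ordered = review_order(example_risks)
for layer, description in ordered:
    print(f"{layer.value}. {layer.name}: {description}")
```

Sorting only sets the order of review. As noted above, lower layers still need to be revisited at later intervals of the lifecycle.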

5. AI Capability and Risk Lifecycle

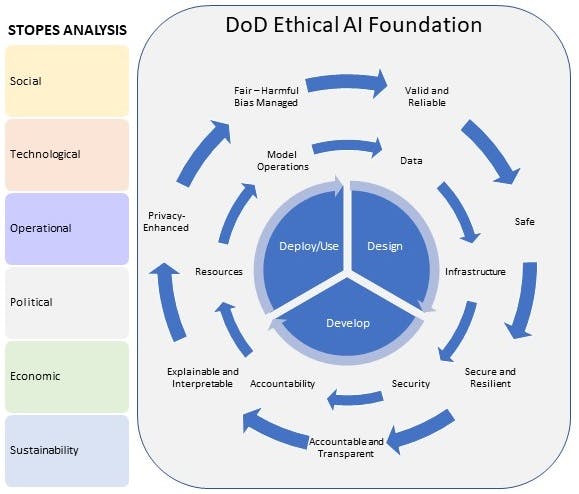

According to the DoD Responsible AI Strategy and Implementation Pathway, the AI product lifecycle consists of the iterative activities of design, develop, deploy, and use. Equally iterative in nature is the need to evaluate and address risk throughout the AI lifecycle and across activities.

For simplicity, Figure 4 combines Deploy and Use within the same phase. The AI capability lifecycle consists of the following phases:

- Design: Consists of the activities of intake, ideation, and assessment. Within design, it is necessary to identify the use case and its relationship with other existing capabilities, collect requirements, finalize desired outcomes and objectives, conceptualize and design the AI capability, and evaluate available resources for the problem.

- Develop: According to the Defense Innovation Unit, this phase refers to the iterative process of writing and evaluating the AI capability's program code. Also, all development stakeholders should focus on the management of data models, continuous monitoring, output verification, audit mechanisms, and governance roles.

- Deploy/Use: This phase encompasses the processes of utilizing the AI capability during the operational window, providing training to end-users for operations, and monitoring outputs.

- Important note: Various STOPES factors and risks may be less or more relevant during different phases of the AI capability lifecycle.

Risk is already a complex and dynamic concept, and the multifaceted operations and requirements of the Department amplify that complexity. The abstractions provided in the DAGR simplify the portrayal of risk in the context of AI capabilities. Figure 4 describes the following interactions:

- The necessity to prioritize and evaluate risk through the DISARM hierarchy of AI risk throughout the AI capability lifecycle (design, develop, and deploy/use).

- Addressing the risk guidelines of valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, and privacy-enhanced from the NIST AI RMF and the DoD AI Ethical Principles through the prioritization of the DISARM hierarchy of AI risk.

- Utilizing the STOPES analysis to produce relevant factors and risks of an AI capability while accounting for the NIST AI RMF guidelines. For each risk, evaluation of the corresponding probability of occurrence and consequence are needed.

- For all described risks, decide upon a risk strategy of accept, mitigate, transfer, or avoid.
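One way to record the outputs of these interactions is a simple risk register entry. The sketch below is an assumption about how such a record could look: the `RiskEntry` and `Strategy` names and the example entry are hypothetical, while the six STOPES factors and the four strategies come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    """The four risk strategies named in the text."""
    ACCEPT = "accept"
    MITIGATE = "mitigate"
    TRANSFER = "transfer"
    AVOID = "avoid"


STOPES_FACTORS = (
    "social", "technological", "operational",
    "political", "economic", "sustainability",
)


@dataclass
class RiskEntry:
    """One described risk (hypothetical record layout)."""
    factor: str        # one of STOPES_FACTORS
    description: str
    probability: str   # qualitative label, e.g. "unlikely"
    consequence: str   # qualitative label, e.g. "major"
    strategy: Strategy

    def __post_init__(self):
        # Reject anything that is not a STOPES factor.
        if self.factor not in STOPES_FACTORS:
            raise ValueError(f"unknown STOPES factor: {self.factor}")


# Hypothetical entry, for illustration only.
entry = RiskEntry(
    factor="technological",
    description="sensor feed outage",
    probability="unlikely",
    consequence="major",
    strategy=Strategy.MITIGATE,
)
print(entry)
```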

6. STOPES AI Risk Considerations

When evaluating risk for an AI capability, it is important to start the analysis from a foundational level and expand the risk assessment based on the capability, operational need, and other external factors. The following initial guiding questions are not intended to be an all-inclusive review of risk against the DoD AI Ethical Principles and guiding principles of the NIST AI RMF through a STOPES analysis, but rather to serve as a starting point to promote effective risk dialogue.

SOCIAL

- What are, if any, the positive and negative social and societal implications (domestic or international) if the AI capability performs as designed – and if it does not perform as designed? For example, what may the impacts be to the local community or internal employees if an AI capability is inaccurate or unreliable?

- What are, if any, the risks of physical and mental harm to individuals, communities, society, or other nations if an AI capability performs as designed – and if it does not perform as designed?

- What are, if any, the risks of misinformation or disinformation from a societal perspective if an AI capability does not perform as designed?

TECHNOLOGICAL

- How does the AI capability function appropriately when it encounters anomalies?

- How is the AI capability able to maintain acceptable functionality in the face of internal or external change?

- How does the AI capability degrade safely, gracefully, and within acceptable risk parameters, when necessary?

- Is the AI capability assessed for resiliency and security against adversarial actions or activities? If so, what people, policy, process, and technology mitigations are in place?

- How is data collected, what is the scope of data validation, and how is bias mitigated?

- What impacts to the 16 critical infrastructure sectors may be realized with an AI capability not performing as designed? Refer to Department of Homeland Security Cybersecurity and Infrastructure Security Agency (DHS CISA) Critical Infrastructure Sectors. Apply to domestic and international critical infrastructure, while accounting for additional regional requirements.

- The 16 critical infrastructure sectors are: (1) chemical, (2) commercial facilities, (3) communications, (4) critical manufacturing, (5) dams, (6) defense industrial base, (7) emergency services, (8) energy, (9) financial services, (10) food and agriculture, (11) government facilities, (12) healthcare and public health, (13) information technology, (14) nuclear reactors, materials, and waste, (15) transportation systems, and (16) water and wastewater.

OPERATIONAL

- Has the AI capability objective been formulated and formally authorized for use to satisfy an operational objective or requirement?

- What are, if any, the risks to maintaining operations of the AI capability due to a change of available operational resources?

POLITICAL

- What are, if any, the positive and negative political implications if the AI capability performs as designed – and if it does not perform as designed?

- What are, if any, the possible perceptions of non-partisan government support?

- What are, if any, the risks to the political structure of the United States or other nations that may result from an AI capability not performing as designed?

- What are, if any, the risks of misinformation or disinformation to the political system or elections of the United States if an AI capability does not perform as designed?

ECONOMIC

- What are, if any, the risks of undesirable economic or acquisition impacts if the AI capability performs as designed – and if it does not perform as designed?

- What are, if any, the economic risks and implications of the AI capability operating incorrectly or inappropriately, which results in death, operational losses, degradation of health, destruction of property, or negative effects to the environment?

SUSTAINABILITY

- What are, if any, the risks of undesirable environmental impacts that do not align with Executive Orders, DoD Guidance, and international laws/accords if the AI capability performs as designed – and if it does not perform as designed? For example, are there any risks to scope 1, 2, and 3 emissions due to the use of an AI capability?

- What are, if any, the risks of undesirable effects on environmental factors that may endanger human life or property, climate change, sustainable use and protection of water and marine resources, or overall protection of the ecosystem if the AI capability performs as designed – and if it does not perform as designed?

7. AI Risk Evaluation Process

When evaluating AI capability risk, there are three general categories of evaluation: (1) independent AI capability (no dependencies), (2) unidirectional dependency between AI capabilities, and (3) bidirectional dependency between AI capabilities. Each of these processes will be explained below, but first, it is necessary to highlight how each described risk is evaluated for an individual AI capability.

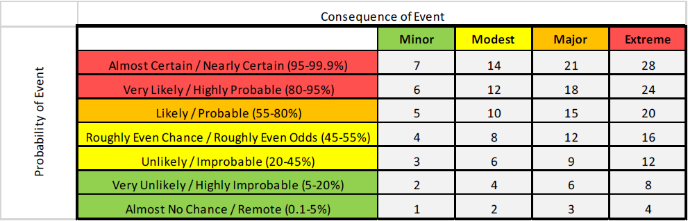

When conducting a STOPES analysis, each risk must be qualitatively assessed with the following risk matrix and corresponding numerical values, as shown in Figure 5. Prior to beginning, it is necessary for the risk assessors to describe what consequences are considered extreme, major, modest, or minor. The probability of an event is described as very unlikely (~0-20%), unlikely (~21-50%), likely (~51-80%), or very likely (~81-100%).
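The probability bands can be expressed as a small lookup. This is a sketch only: the function name `probability_band` is hypothetical, and because the stated ranges are approximate, the treatment of values that fall between bands (for example 20.5%) is an assumption made here.

```python
def probability_band(percent):
    """Map an estimated probability (0-100) to the qualitative label.

    Assumption: each band's upper bound is inclusive, so a value between
    two stated bands (e.g. 20.5) falls into the higher band.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent <= 20:
        return "very unlikely"
    if percent <= 50:
        return "unlikely"
    if percent <= 80:
        return "likely"
    return "very likely"


for estimate in (10, 35, 65, 95):
    print(estimate, probability_band(estimate))
```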

For each risk, the evaluator will assess the probability of occurrence against the consequence of the event and select the numeric value from the table. This step is to be repeated for every risk.
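The lookup can be sketched as follows. Figure 5 is not reproduced in this text, so the matrix values here are an assumption: each label is given an ordinal level from 1 to 4 and the score is their product. That assumption agrees with the two scores quoted elsewhere in this document (likely and extreme giving 12, very unlikely and extreme giving 4), but Figure 5 remains the authoritative table. The names `PROBABILITY_LEVEL`, `CONSEQUENCE_LEVEL`, and `risk_score` are hypothetical.

```python
# Assumed ordinal levels; Figure 5 is the authoritative source of values.
PROBABILITY_LEVEL = {
    "very unlikely": 1,
    "unlikely": 2,
    "likely": 3,
    "very likely": 4,
}
CONSEQUENCE_LEVEL = {
    "minor": 1,
    "modest": 2,
    "major": 3,
    "extreme": 4,
}


def risk_score(probability, consequence):
    """Return the assumed matrix value for a probability/consequence pair."""
    return PROBABILITY_LEVEL[probability] * CONSEQUENCE_LEVEL[consequence]


print(risk_score("likely", "extreme"))
print(risk_score("very unlikely", "extreme"))
```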

The next step is to select risk mitigation strategies and controls for each risk and re-evaluate the probability and consequence of the event. The numeric value selected from the table for the updated probability and consequence is known as the residual risk.

Appropriate stakeholders must determine acceptable risk thresholds after mitigations have been selected. For example, stakeholders may decide that risk is acceptable when each risk is below a predetermined threshold, and/or when the sum of residual risk for each STOPES factor is below a predetermined threshold. Another example may be that stakeholders have determined that an AI capability is within an acceptable range of risk if every risk is below a value of 6, there is zero risk of human and environmental harm, and the sum of each STOPES factor is below 30. Once again, it is the responsibility of the decision maker to explicitly delineate acceptable risk parameters.
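The second example can be sketched as a simple check. The limits of 6 and 30 come from that example and are not DAGR requirements; the function name, the boolean `harm_risk_present` flag, and the sample scores are hypothetical choices made for this illustration.

```python
def within_acceptable_risk(residual_scores, harm_risk_present,
                           per_risk_limit=6, factor_sum_limit=30):
    """Apply the example acceptance rule to residual risk scores.

    residual_scores: dict mapping a STOPES factor to its list of residual
    scores. Limits default to the values in the example and should be set
    by the decision maker.
    """
    if harm_risk_present:
        return False  # the example requires zero risk of harm
    for scores in residual_scores.values():
        if any(score >= per_risk_limit for score in scores):
            return False  # an individual risk is at or above the limit
        if sum(scores) >= factor_sum_limit:
            return False  # a factor sum is at or above the limit
    return True


# Hypothetical residual scores, for illustration only.
sample = {"social": [4, 3], "technological": [1, 3, 3]}
print(within_acceptable_risk(sample, harm_risk_present=False))
```

When the check fails, the following paragraph applies: the fielding decision should be escalated appropriately.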

If acceptable risk thresholds have not been achieved, then the fielding of an AI capability should be escalated appropriately.

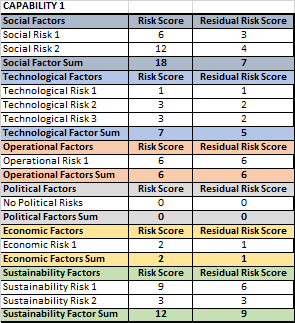

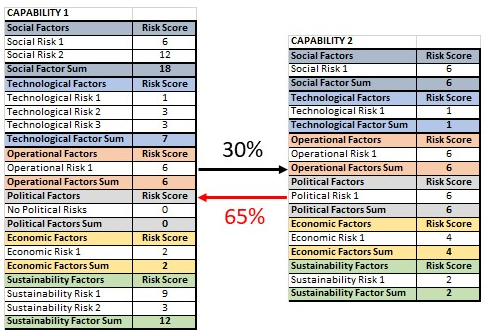

Independent AI Capability (No Dependency)

After each risk has been evaluated by probability of occurrence against consequence, as described above, it is categorized within one of the social, technological, operational, political, economic, and sustainability (STOPES) factors. Once each risk and value has been annotated within the respective category, all scores for the category are added and annotated as the final sum for the category. An example of performing these steps is shown in Figure 6. For example, in Figure 6 this AI capability has three technological risks with risk scores of 1, 3, and 3, for a total category sum of 7.
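The category sum can be sketched as a short aggregation. The `factor_sums` helper is a hypothetical name; the three technological scores are the ones quoted from Figure 6, and the remaining Figure 6 values are not reproduced here.

```python
from collections import defaultdict


def factor_sums(risks):
    """Add up risk scores per STOPES factor.

    risks: iterable of (factor, score) pairs.
    """
    totals = defaultdict(int)
    for factor, score in risks:
        totals[factor] += score
    return dict(totals)


# The three technological risk scores quoted from Figure 6.
technological_risks = [
    ("technological", 1),
    ("technological", 3),
    ("technological", 3),
]

sums = factor_sums(technological_risks)
print(sums)
```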

The next step is to implement one of the four risk mitigation strategies: accept, transfer, mitigate, or avoid. After a mitigation strategy has been selected for each risk, it is necessary to re-evaluate the risk score from the risk matrix. Using Figure 6 as an example, assume a risk mitigation strategy was selected for Social Risk 2, which changed the probability of the event from likely to very unlikely, while the consequence of the event remained extreme. In this case, the risk value would decrease from 12 to 4. All residual risk scores for each factor are then summed again.

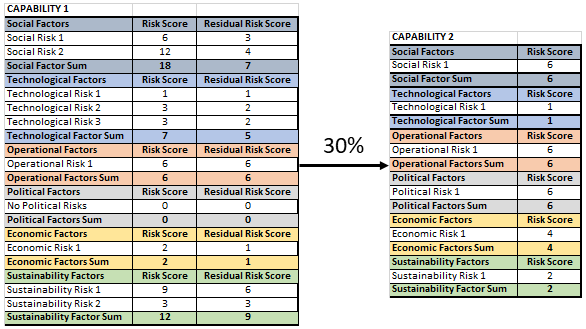

Unidirectional Dependency of AI Capabilities

For unidirectional dependencies, the first step is to calculate the risk for each capability as an independent capability. The second step is to evaluate the strength of the dependency between the two AI capabilities, as a percentage between 0% and 100%. The third step is to multiply the factor sum of each category (after mitigation) from the Influencing Capability by the relationship percentage. This calculated value is known as the Derivative. The final step is to add the Derivative for each of the factor categories to the corresponding Factor Sum of the Influenced Capability. This new value is known as the Dependency Sum.
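The third and final steps can be written compactly. The symbols are introduced here for illustration and do not appear in the DAGR: for each STOPES factor $f$, let $S_f^{\text{influencing}}$ be the residual factor sum of the Influencing Capability, $S_f^{\text{influenced}}$ the residual factor sum of the Influenced Capability, and $w$ the relationship strength expressed as a fraction between 0 and 1.

$$
D_f = w \cdot S_f^{\text{influencing}}, \qquad DS_f = S_f^{\text{influenced}} + D_f
$$

Here $D_f$ is the Derivative and $DS_f$ is the Dependency Sum for factor $f$.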

Important note: It is important to examine whether or not a derivative should be accounted for when assessing the risk of the Influenced Capability.

Using an example, as shown in Figure 7, two AI capabilities share a unidirectional relationship, in which Capability 1 influences Capability 2. For this example, it is also assumed that each derivative is to be accounted for in Capability 2. Therefore, Capability 1 is known as the Influencing Capability and Capability 2 is known as the Influenced Capability.

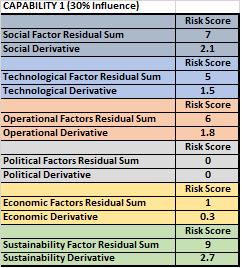

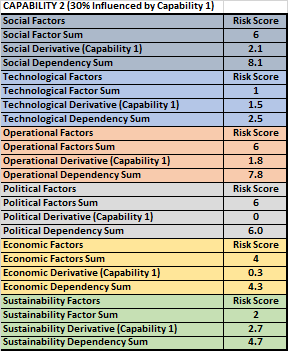

Figure 8 shows the Derivative from Capability 1, obtained by multiplying each of the Residual Factor Sum values by 30% (0.30). For example, Capability 1 has a Residual Social Factor Sum of 7, which is multiplied by the relationship strength of 0.30, resulting in a Social Derivative of 2.1.

Each of the Derivative values from Capability 1 is added to the corresponding Factor Sum of Capability 2, resulting in the Dependency Sum, which is depicted in Figure 9. For example, Capability 1 produces an Economic Derivative of 0.3, which is added to the Economic Factor Sum of 4 from Capability 2, resulting in an Economic Dependency Sum of 4.3 for Capability 2.

The final Dependency Sum values for each STOPES factor are the final calculated risk for the capability.
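The two worked values from this example can be reproduced with a short sketch. The function names `derivative` and `dependency_sum` are hypothetical, and rounding to two decimal places is a choice made here to avoid floating-point noise, not something the DAGR specifies.

```python
def derivative(residual_factor_sum, strength):
    """Derivative = residual factor sum of the Influencing Capability
    multiplied by the relationship strength (a fraction from 0 to 1)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return round(residual_factor_sum * strength, 2)


def dependency_sum(influenced_factor_sum, derivative_value):
    """Dependency Sum = factor sum of the Influenced Capability
    plus the Derivative for the same factor."""
    return round(influenced_factor_sum + derivative_value, 2)


# Values quoted in the text for Capability 1 and Capability 2.
social_derivative = derivative(7, 0.30)
economic_dependency_sum = dependency_sum(4, 0.3)

print(social_derivative)        # 2.1
print(economic_dependency_sum)  # 4.3
```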

Bidirectional Relationship of AI Capabilities

For bidirectional relationships, risk for each capability is first calculated as an independent capability. Then the same steps are performed as described in the Unidirectional Dependency of AI Capabilities section, but both directions must be addressed, because the strength of the relationship may differ in each direction. For example, Figure 10 depicts that Capability 1 has a 30% influence over Capability 2, but Capability 2 has a 70% influence over Capability 1.
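A sketch of the two-direction calculation follows. It rests on an assumption the text does not state explicitly: each Derivative is computed from the other capability's independent residual factor sums, with no further iteration between the two. The function name and the factor sums are hypothetical (the Figure 10 values are not reproduced here); only the 30% and 70% strengths come from the example.

```python
def bidirectional_dependency_sums(sums_1, sums_2,
                                  strength_1_to_2, strength_2_to_1):
    """Return the Dependency Sums for both capabilities.

    sums_1, sums_2: dicts of residual factor sums with the same keys.
    Assumption: Derivatives use the independent sums, without iteration.
    """
    dep_1 = {f: round(sums_1[f] + sums_2[f] * strength_2_to_1, 2)
             for f in sums_1}
    dep_2 = {f: round(sums_2[f] + sums_1[f] * strength_1_to_2, 2)
             for f in sums_2}
    return dep_1, dep_2


# Hypothetical residual factor sums, for illustration only.
capability_1 = {"social": 7, "economic": 1}
capability_2 = {"social": 5, "economic": 4}

dep_1, dep_2 = bidirectional_dependency_sums(
    capability_1, capability_2,
    strength_1_to_2=0.30, strength_2_to_1=0.70,
)
print(dep_1)
print(dep_2)
```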

8. Future Works and DAGR Roadmap

When evaluating risk for an AI capability, it is important to start the analysis from a foundational level and expand the risk assessment based on the capability, operational need, and other external factors. The contents of this document are not intended to be an all-inclusive review of risk against the guiding principles of the NIST AI RMF through a STOPES analysis, but rather to serve as an initial guiding document to promote effective risk evaluation and dialogue of AI capabilities.

The proposed DAGR future works and roadmap will highlight the following:

- More detailed risk questions and mitigations that incorporate the STOPES factors across the DoD AI Ethical Principles and the NIST AI RMF directed at various profiles and AI stakeholders. For example, data scientists, AI/ML engineers, cybersecurity professionals, and operational managers at various levels.

- Continued iterations and refinements to the DAGR.

- Potential development of a risk evaluation tool that accounts for relationships between AI capabilities and effective visualization of risks. Also, further expansion of modeling correlation and dependencies, as well as testing and modeling causal relationships.

- Continued research in quantifying risks further, to possibly include bias and socio-technical factors. Further refine evaluation of dependent risks.