1. SHIELD Intake

1.1 SET: Consider Previously Learned Lessons

- Review projects similar to the current use case, including incident repositories, to identify RAI-related "lessons learned" that are applicable to the current project.

- Document the relevant legal, ethical, policy, and privacy guidance and considerations that emerged from your review. Check Gamechanger (a comprehensive repository of all DoD statutory and policy-driven requirements) for relevant governance requirements.

- If your project involves the use of personally identifiable information (PII), consult with your Privacy Officer to determine if use of the PII: 1) triggers any Privacy Act restrictions or constraints; 2) is consistent with the Fair Information Practice Principles (FIPPs) set forth in OMB Circular A-130, Appendix II; 3) requires creation or modification of a Privacy Impact Assessment (PIA) as mandated by Section 208 of the E-Government Act; 4) requires application of one or more of the Committee on National Security Systems Instruction (CNSSI) No. 1253 Privacy Overlays; 5) involves protected health information (PHI) and requires application of NIST SP 800-66, Rev. 2, Implementing the HIPAA Security Rule: A Cybersecurity Resource Guide, to the project; and 6) necessitates use of privacy-enhancing cryptographic techniques in addition to differential privacy methods to improve the privacy posture of this project.

- Begin to plan how novel artifacts and new lessons learned from the current project will be captured for storage in these repositories (see section 7.4).

1.2 SET: Determine Relevant Laws, Ethical Frameworks, and Policies

- Identify relevant legal, ethical, risk and policy frameworks and assess their applicability. [See Appendix 6]

- Decide which of the relevant laws, regulations, policies, and frameworks (legal, ethical, risk, etc.) are applicable to the project, and document the rationale behind each determination of applicability. Ensure the AI project’s downstream documentation will align with the artifacts and standards that correspond to the laws, regulations, policies, and frameworks selected.

- Are the policies, processes, procedures, and practices across the organization mapped to these laws, regulations, policies, and frameworks, and are they transparent and implemented effectively? Are there authoritative references for each applicable framework, and have subject matter experts been identified who can be consulted about them? Is there a plan for legal reviews (if applicable)?

- Have you established a procedure to update your reviews if the application domain changes and/or at regular intervals?

- Have you identified the appropriate senior responsible owner(s) who are accountable for holding the ethical, legal, and safety risks for the AI project?

1.3 SET: Identify and Engage Stakeholders

- Identify the relevant stakeholders, subject matter experts, domain experts, and operational users.

- Select a reference stakeholder (if applicable) who can speak authoritatively for, or offer representative views of, the stakeholder group.

- If certain stakeholders are unavailable to you, identify whether there are other entities that can represent their perspective.

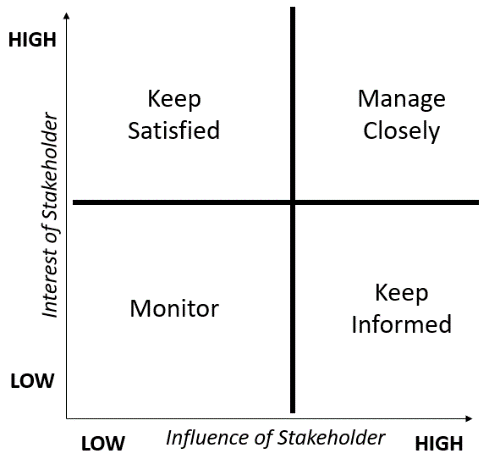

- Map stakeholders (including 2nd/3rd-degree stakeholders) on a stakeholder management map based on level of interest and level of influence. Manage stakeholders appropriately to promote project success.

- Ensure that all stakeholders (including external stakeholders) are identified, and establish how their perspectives/outcomes will be surveyed/tracked throughout the product life cycle. If appropriate, highlight the process that will be used to evaluate and communicate feedback throughout the AI project.

- Initiate preliminary conversations with these groups about the system.

- Create a preliminary plan for processes to engage stakeholders, subject matter and domain experts, and operational users, and to incorporate their input throughout the planning, development, and deployment stages.

- Has a single authoritative speaker for the project and/or mission commander been identified for engaging stakeholders or speaking externally about the project?
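
The interest/influence mapping described in this section can be sketched in a few lines of Python. This is only an illustration, not a prescribed tool: the 0.0 to 1.0 scoring scale, the 0.5 threshold, the `Stakeholder` class, and the four quadrant labels (a common power/interest grid convention) are all assumptions that do not come from this guidance, and the sample stakeholders are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stakeholder:
    name: str
    interest: float   # assumed scale: 0.0 (none) to 1.0 (very high)
    influence: float  # assumed scale: 0.0 (none) to 1.0 (very high)


def quadrant(s: Stakeholder, threshold: float = 0.5) -> str:
    """Place a stakeholder in one of four interest/influence quadrants.

    The labels follow a common power/interest grid convention and are
    an assumption, not terminology taken from this document.
    """
    high_interest = s.interest >= threshold
    high_influence = s.influence >= threshold
    if high_influence and high_interest:
        return "manage closely"
    if high_influence:
        return "keep satisfied"
    if high_interest:
        return "keep informed"
    return "monitor"


def build_map(stakeholders):
    """Group stakeholder names by quadrant label."""
    grid = {}
    for s in stakeholders:
        grid.setdefault(quadrant(s), []).append(s.name)
    return grid


if __name__ == "__main__":
    # Hypothetical entries, including a 2nd-degree stakeholder.
    example = [
        Stakeholder("Operational users", interest=0.9, influence=0.6),
        Stakeholder("Requirements owner", interest=0.4, influence=0.9),
        Stakeholder("Affected community (2nd-degree)", interest=0.8, influence=0.2),
        Stakeholder("Adjacent program office", interest=0.2, influence=0.3),
    ]
    for label, names in build_map(example).items():
        print(f"{label}: {', '.join(names)}")
```

Scores would normally come from the stakeholder conversations themselves and should be revisited as the project evolves; the quadrant only suggests a starting level of engagement.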

1.4 SET: Concretize the Use Case for the AI

- Begin use case analysis and workflow mapping to inform ideation.

- What is the root cause of the problem?

- What are the project’s impact goals?

- Do you have all relevant use case / mission domains identified, mapped to the expected mission workflow, and defined at a level of detail sufficient for requirements analysis?

- Compose a clear description of how AI supports the mission and which portions of the mission workflow are critical paths.

- Identify whether there are multiple AI-enabled capabilities that will interact with one another to support the mission. Document how they will interact with one another (this is necessary for risk evaluation in DAGR).

- Have you identified the bounds of responsible/intended uses for the system?

- How will compromise or misuse of the system be identified?

- Have you begun to assemble your concept of operations (CONOPS)? Is a concept of employment (CONEMPS) needed to elaborate details?

- What degree of direct human control is needed for the system once deployed? Is there a procedure for determining when automated decisions or activities of the system will require human approval or must be flagged for human oversight?

1.5 SET: Decide to Proceed to Ideation

- Begin thinking through how RAI-related issues will be identified, tracked, and communicated to relevant personnel.

- Decide the process through which Users, Requirements Owners, and Acquisition members will share information about the current status of the project.

- Establish how the project's alignment with the DoD AI Ethical Principles will be monitored and measured. Decide how risk management frameworks such as DAGR, as well as Executive Dashboards, will be used to this effect.

- Conduct a feasibility analysis for the project, including a data availability analysis.

- Do you have the appropriate approvals to proceed?